The Twitter account known as SwiftOnSecurity today deleted a short thread of tweets stating that the vast majority of 4K laptop screens could be scaled down to 1080p. This would improve performance because far fewer pixels need to be rendered and refreshed, and most users would scarcely notice the difference.

Swift was referring to viewing distance and how it directly affects the way people perceive a display’s pixel density. Apple capitalized on this with the iPhone 4’s Retina display: its engineers figured out that, on average, people wouldn’t be able to see the individual pixels on an iPhone held at a comfortable distance from their eyes. Nowadays most phones have high-resolution screens. Of course, if your visual acuity is outside the norm, you might see individual pixels, but that’s unusual.

A similar principle applies to computer monitors, television screens, and cinema displays. The farther someone sits from a display, the less likely they are to discern individual pixels. Given that commonly understood principle, it should come as no surprise that, once the pixels are small and dense enough, most people sitting at a typical distance from a 14″ laptop screen see little to no difference between 1080p and 4K.
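To put rough numbers on this, here is a small Python sketch. It assumes the common rule of thumb that 20/20 vision resolves detail down to about 1 arcminute of visual angle (real eyes vary, so treat the results as estimates), and the function names are illustrative only. It computes pixel density from a screen’s resolution and diagonal, then the distance beyond which a single pixel subtends less than that angle.

```python
import math

# Assumption: "normal" (20/20) visual acuity resolves detail down to about
# 1 arcminute. Individual eyesight varies, so these are rough estimates.
ACUITY_ARCMIN = 1.0


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density of a screen, from its resolution and diagonal size."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


def blend_distance_in(width_px: int, height_px: int, diagonal_in: float,
                      acuity_arcmin: float = ACUITY_ARCMIN) -> float:
    """Viewing distance (inches) beyond which one pixel subtends less than
    the given visual angle, so individual pixels can't be resolved."""
    pixel_pitch_in = 1.0 / pixels_per_inch(width_px, height_px, diagonal_in)
    angle_rad = math.radians(acuity_arcmin / 60.0)
    return pixel_pitch_in / math.tan(angle_rad)


if __name__ == "__main__":
    resolutions = {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}
    for label, (w, h) in resolutions.items():
        for size in (14, 27, 70):
            ppi = pixels_per_inch(w, h, size)
            dist = blend_distance_in(w, h, size)
            print(f"{size:>2}-inch {label:<6}: {ppi:6.1f} PPI, "
                  f"pixels blend beyond ~{dist:5.1f} in")
```

Under those assumptions, a 14″ 1080p laptop screen has about 157 pixels per inch, and its pixels blend together beyond roughly 22 inches (about 55 cm); the 4K version of the same screen only needs about 11 inches. The same functions can be pointed at other sizes, such as 27″ monitors or 70″ TVs.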

Let’s define some technical stuff here. If you look at an average flat-screen TV or LCD computer screen (including most laptops), chances are it has a widescreen format, most likely with an aspect ratio of 16:9. The dominant resolution for these displays is 1080p: 1,080 pixels down the vertical and 1,920 pixels across the horizontal. This is called Full HD, or Full High Definition. HD TV uses this standard for broadcast, so it was an easy decision for computer manufacturers to adopt it.
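As a quick sanity check on the 16:9 figure, this Python sketch (the helper name `aspect_ratio` is just illustrative) reduces a resolution to its simplest whole-number ratio by dividing both dimensions by their greatest common divisor:

```python
from math import gcd


def aspect_ratio(width_px: int, height_px: int) -> tuple[int, int]:
    """Reduce a resolution to its simplest whole-number aspect ratio."""
    divisor = gcd(width_px, height_px)
    return width_px // divisor, height_px // divisor


if __name__ == "__main__":
    w, h = aspect_ratio(1920, 1080)
    print(f"1920 x 1080 is {w}:{h}")  # gcd is 120, giving 16:9
```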

Where 4K differs is that it is named for the horizontal pixel count rather than the vertical, and even then the number is only approximate. Competing standards use either 3,840 x 2,160 pixels or 4,096 x 2,160 pixels. By the 1080p naming convention this would be called 2160p, which is only double the vertical pixel count of 1080p, but “4K” sounds better in marketing materials, so here we are.
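One way to see how the two 4K variants relate to Full HD is to compute the exact scale factor along each axis. This is a minimal Python sketch; the labels “consumer UHD” and “cinema 4K” are the common names for these two resolutions, and the helper name is illustrative.

```python
from fractions import Fraction

FULL_HD = (1920, 1080)

# The two competing "4K" resolutions.
FOUR_K = {
    "3840 x 2160 (consumer UHD)": (3840, 2160),
    "4096 x 2160 (cinema 4K)": (4096, 2160),
}


def scale_factors(target, source=FULL_HD):
    """Exact per-axis scale factors needed to map `source` onto `target`."""
    return Fraction(target[0], source[0]), Fraction(target[1], source[1])


if __name__ == "__main__":
    for name, res in FOUR_K.items():
        sx, sy = scale_factors(res)
        uniform = "uniform" if sx == sy else "non-uniform"
        print(f"{name}: x{float(sx):.3f} across, "
              f"x{float(sy):.3f} down ({uniform})")
```

The consumer variant is an exact 2x in both directions. The cinema variant needs 32/15 (about 2.133x) across but only 2x down, because its aspect ratio is 256:135 (about 1.9:1) rather than 16:9, so a 1080p image stretched to fill it comes out about 6.7% wider than it should be.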

Swift’s original (deleted) tweet said that 4K is close enough to four times the resolution of Full HD. This is correct: four 1080p images fit exactly into a 3,840 x 2,160 display, with a little room to spare on a 4,096-pixel-wide one. So their suggestion to drop a 14″ laptop screen to 1080p, cutting the number of pixels the graphics card has to render by about 75%, is a reasonable trade-off for slightly lower image quality. After all, taking visual acuity, ambient lighting conditions, and viewing distance into account, the vast majority of people won’t notice.
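The arithmetic behind that 75% figure is easy to check. This Python sketch (helper name illustrative) counts pixels only; real GPU savings depend on the workload and won’t necessarily scale one-to-one with pixel count.

```python
FULL_HD_PIXELS = 1920 * 1080


def pixels_saved(width_px: int, height_px: int) -> float:
    """Fraction of pixels no longer rendered when dropping to 1920 x 1080."""
    return 1 - FULL_HD_PIXELS / (width_px * height_px)


if __name__ == "__main__":
    for w, h in ((3840, 2160), (4096, 2160)):
        total = w * h
        print(f"{w} x {h}: {total:,} pixels, "
              f"{total / FULL_HD_PIXELS:.2f}x Full HD, "
              f"{pixels_saved(w, h):.1%} fewer pixels at 1080p")
```

This prints 8,294,400 pixels (exactly 4.00x Full HD, 75.0% fewer at 1080p) for 3,840 x 2,160, and 8,847,360 pixels (4.27x, 76.6% fewer) for 4,096 x 2,160.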

Now add people into the mix. Have you ever been to someone’s house where they watch old 4:3 TV shows stretched to fill a 16:9 Full HD screen? They probably haven’t realized the picture is distorted. This happens a lot more than you might think; I’ve seen it myself in various technical support roles, where people run all sorts of odd resolutions and hardly notice. Throw in poor eyesight, and you have a recipe for most people not noticing that a screen is running at 1080p instead of 4K.
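To put a number on how much distortion people tolerate without noticing: assuming a 4:3 picture keeps its full height and is simply stretched sideways to fill a 16:9 screen, the horizontal stretch is the ratio of the two aspect ratios. A minimal Python sketch, with an illustrative helper name:

```python
from fractions import Fraction


def horizontal_stretch(source_ratio: Fraction, screen_ratio: Fraction) -> Fraction:
    """How much wider everything looks when a picture with `source_ratio`
    is stretched to fill a screen with `screen_ratio` at the same height."""
    return screen_ratio / source_ratio


if __name__ == "__main__":
    stretch = horizontal_stretch(Fraction(4, 3), Fraction(16, 9))
    print(f"Stretch factor: {stretch} (about {float(stretch) - 1:.0%} wider)")
```

The result is 4/3: everything on screen is about 33% wider than it should be, which is a far bigger change than the difference between 1080p and 4K on a small screen.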

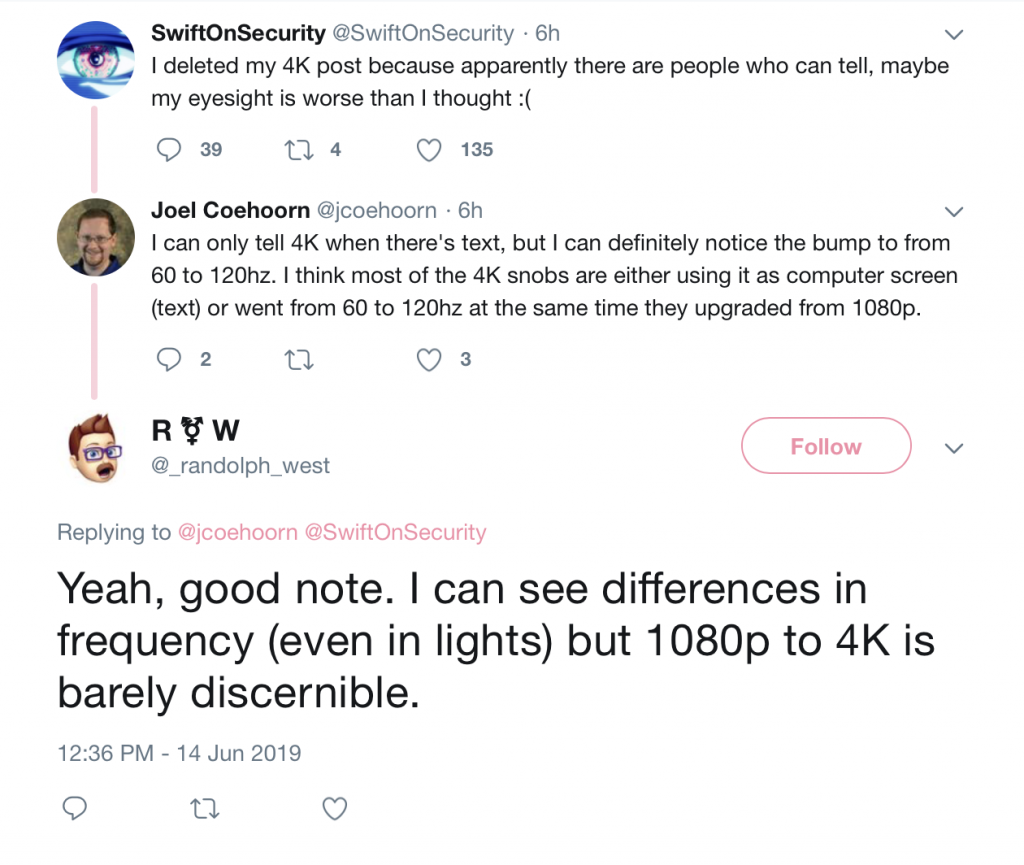

After a few replies (mostly from people in tech) saying “well actually”, Swift deleted the tweets and posted a new one saying that apparently some people can tell the difference, and that perhaps their own eyesight was bad. The responses to this are where Randolph enters the story. Someone else posted a comment saying that the jump from 1080p to 4K coincided with a jump in refresh rates (how many times per second the picture redraws) from 60 Hz to 120 Hz, which might explain why people think they can tell 4K apart from 1080p. I said “Good note” and mentioned that I can tell the difference between refresh rates. That was that, I thought.
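Resolution and refresh rate multiply together, which is one reason the two upgrades are easy to conflate. This Python sketch (helper names illustrative) shows the time available per frame and the raw pixel throughput for a few modes; it ignores blanking intervals and bit depth, so it understates the real link bandwidth.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time available to draw each frame at a given refresh rate."""
    return 1000.0 / refresh_hz


def pixels_per_second(width_px: int, height_px: int, refresh_hz: int) -> int:
    """Pixels the display pipeline must deliver each second (no blanking)."""
    return width_px * height_px * refresh_hz


if __name__ == "__main__":
    modes = [("1080p @ 60 Hz", 1920, 1080, 60),
             ("4K @ 60 Hz", 3840, 2160, 60),
             ("4K @ 120 Hz", 3840, 2160, 120)]
    for name, w, h, hz in modes:
        print(f"{name:<14} {frame_time_ms(hz):5.2f} ms/frame, "
              f"{pixels_per_second(w, h, hz) / 1e6:7.1f} million pixels/s")
```

Going from 1080p at 60 Hz (about 124.4 million pixels per second, 16.67 ms per frame) to 4K at 120 Hz (about 995.3 million, 8.33 ms per frame) is an eightfold increase in pixels per second, with half the time to draw each frame.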

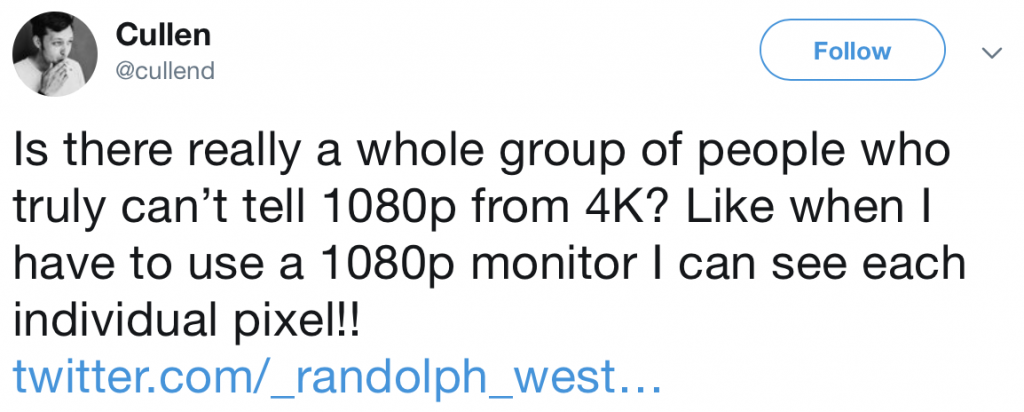

Suddenly, someone I didn’t know, and wasn’t aware of before today, quote-tweeted my post and asked incredulously:

Let’s ignore the entitlement of someone who might be forced to use a high-definition 1080p monitor. It must be tough, Cullen, having the privilege of using high-resolution monitors.

So I responded to Cullen from the point of view that my eyesight isn’t great, and explained how viewing distance works for computer displays under 27″ and TVs under 70″, because I had literally done this exercise in my own house recently to choose the right size of TV for the sitting room.

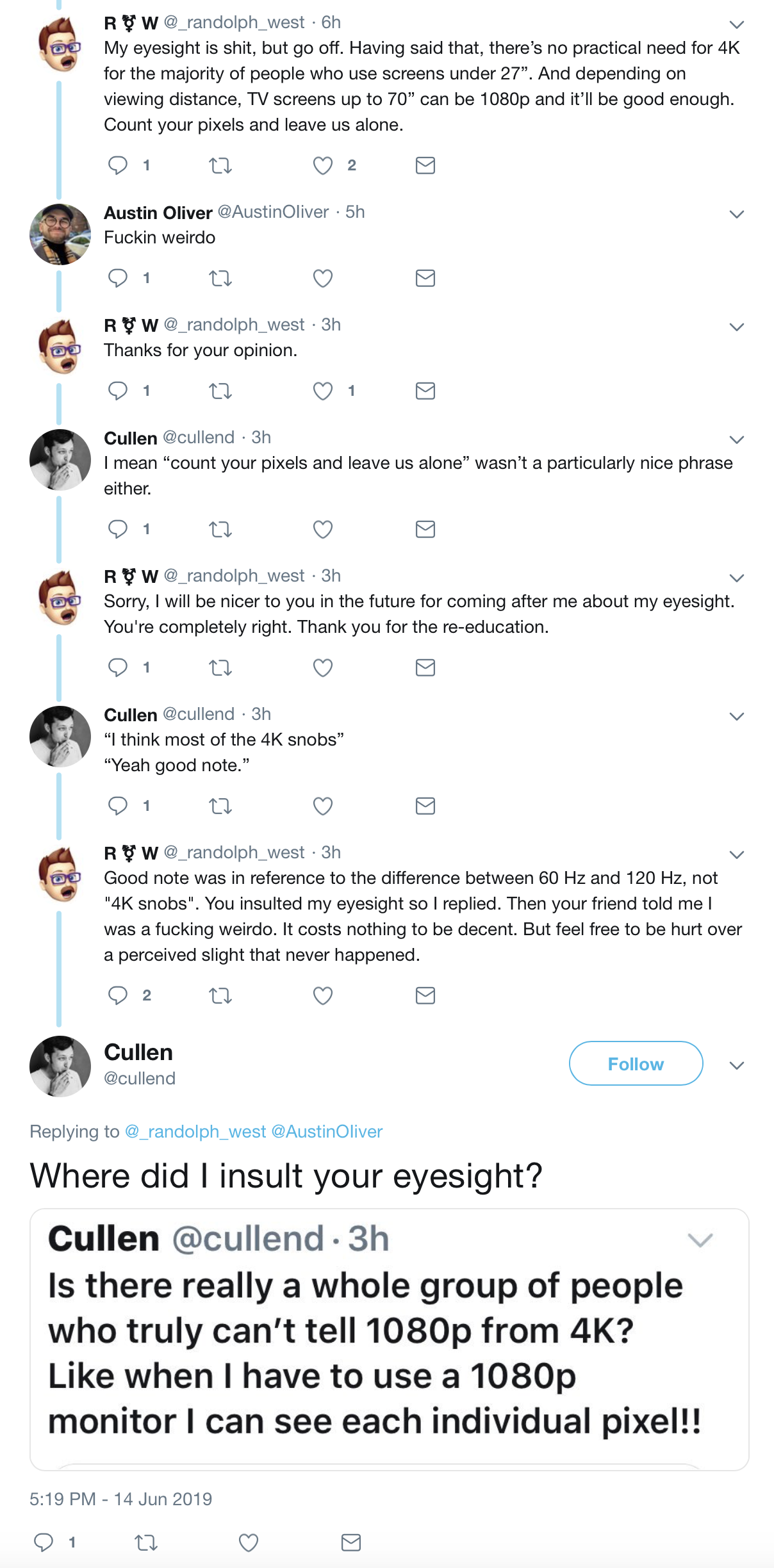

And then it got weird.

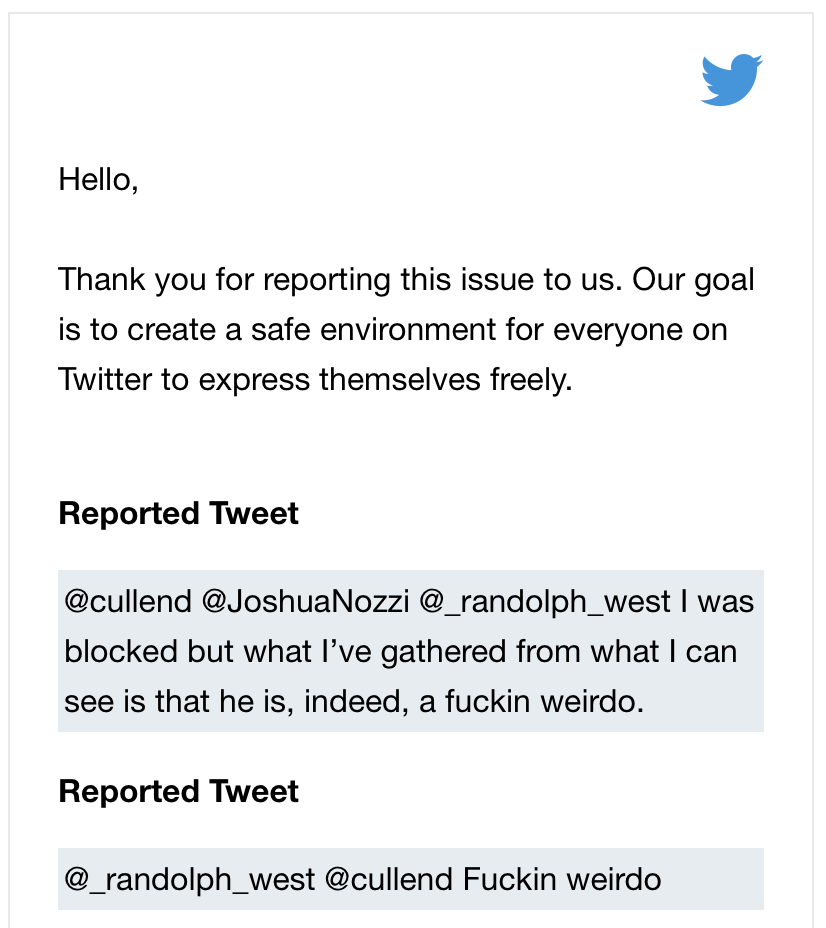

This is the part in the story where I blocked Cullen and Austin Oliver, and reported Austin for calling me a “fuckin weirdo”.

I doubt anything is going to come of this, but this behaviour is not ok. There is no need to randomly attack people you don’t know. My friend Joshua told me privately that somehow Cullen was under some misapprehension about the context of my statement (which from my point of view doesn’t make any sense) and was responding in anger, but that doesn’t excuse Austin.

Don’t be a dick. On the other hand, now maybe you know more about 4K than you wanted.

I have more problems with displays than most people, but I am certain that has to do with how much time I spend using displays and how intensely I use them. I always want “more” on every front (pixels, frequency, colors, etc.) no matter what I have right now.

There was a point in your post that confused me. By my math, a 4K display has precisely four times the pixels of an HD display, and twice the refresh rate.

Two 4K displays nearly tap out the video bandwidth of my work laptop, which practically defines the limit of what I can use. That gives me eight times the HD resolution I have when I’m mobile, so using them makes detailed work a lot easier.

Two 4K displays being a limit is definitely a first world problem, but I’d like two more if I could get them… I could get even more done!

With the 1,920-pixel width of Full HD, a 4K display 3,840 pixels across has exactly four times the resolution, yes. However, some manufacturers use a width of 4,096 pixels, so it isn’t an exact fit if you happen to have a display with that resolution. The image will be ever so slightly stretched in that case.